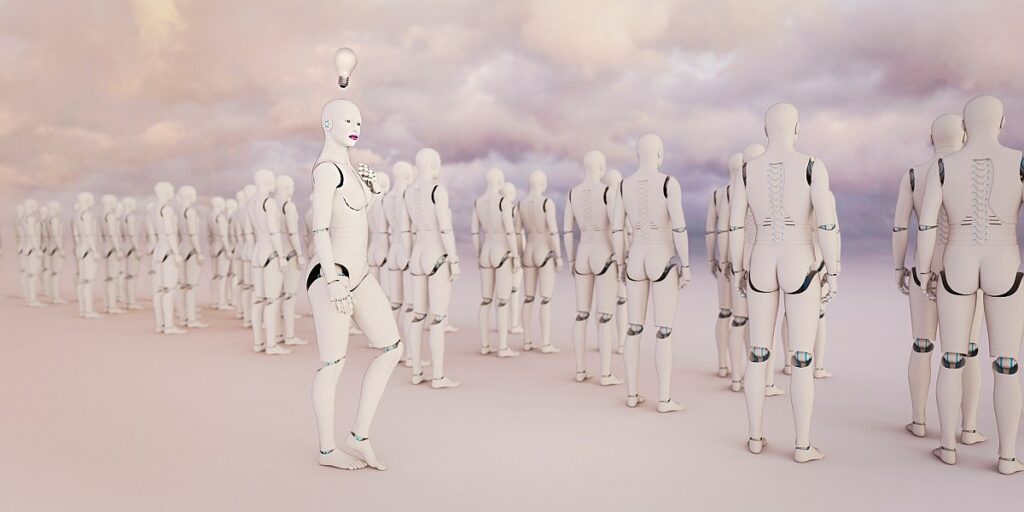

No, you can’t get your AI to ‘admit’ to being sexist, but it probably is anyway

Recent research has raised a concern about large language models (LLMs): they can perpetuate implicit biases even when their outputs contain no overtly biased language. Models such as OpenAI’s GPT-3 are designed to generate neutral, inclusive text, but the way they operate can lead them to infer demographic attributes such as race, gender, and socioeconomic status. Those inferences can then shape the responses the models generate, revealing implicit biases that are not immediately apparent.

For instance, researchers have found that when LLMs are prompted with certain terms or phrases, they may produce responses that align with stereotypical assumptions about specific demographic groups. This is particularly concerning where the models are used for decision-making or content generation, such as hiring, customer service, or education. The consequences can be significant: individuals may be treated unfairly or misrepresented based on inferred characteristics rather than their actual qualifications or identities.

To address these challenges, experts advocate greater transparency about how LLMs are trained and what data they are exposed to. They also call for more robust bias detection and mitigation so that these tools serve all users equitably, and for ongoing dialogue among developers, ethicists, and users about how to handle AI-generated content responsibly. As LLMs are integrated into more areas of society, understanding and addressing their potential to propagate implicit biases is crucial to a more inclusive digital environment.

Though LLMs might not use explicitly biased language, they may infer your demographic data and display implicit biases, researchers say.