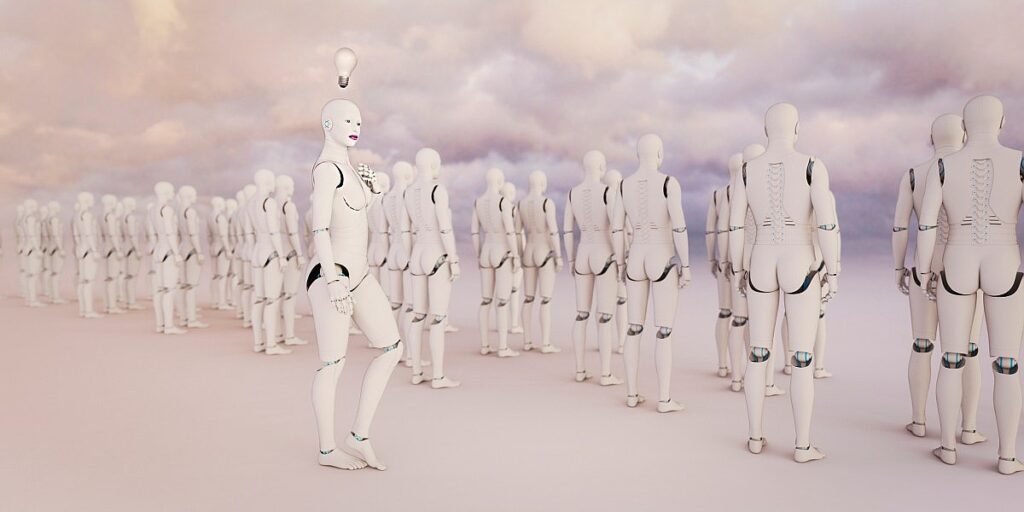

No, you can’t get your AI to ‘admit’ to being sexist, but it probably is anyway

Recent research points to a persistent problem with large language models (LLMs): they can perpetuate implicit biases even when they avoid overtly biased language. Models such as ChatGPT can infer demographic information about users from their interactions, which raises questions about fairness and ethical use. Researchers have found that these models can reflect societal biases by drawing assumptions from the language patterns and context of a conversation. Those assumptions can lead to skewed or harmful outputs.

When users interact with an LLM, the model can pick up on their writing style, word choice, and conversation topics, and its responses may reflect inferences about their gender, age, or ethnicity. This implicit inference can produce answers that line up with stereotypes associated with those groups. If a user asks about a traditionally gendered profession, for example, the model might answer in a way that reinforces the stereotype rather than challenging it. Findings like these point to the need for developers to build stronger safeguards and training practices to reduce bias in LLMs.

The implications extend beyond individual users to society more broadly. As LLMs are built into more applications, from customer service bots to educational tools, keeping them from reinforcing harmful stereotypes becomes more important. Researchers call for more transparency in how LLMs are developed, with emphasis on diverse training data and continuous bias evaluation. By addressing implicit bias, developers can build fairer AI systems that reflect the diversity of human experience and help create a more inclusive digital environment.

Though LLMs might not use explicitly biased language, they may infer your demographic data and display implicit biases, researchers say.