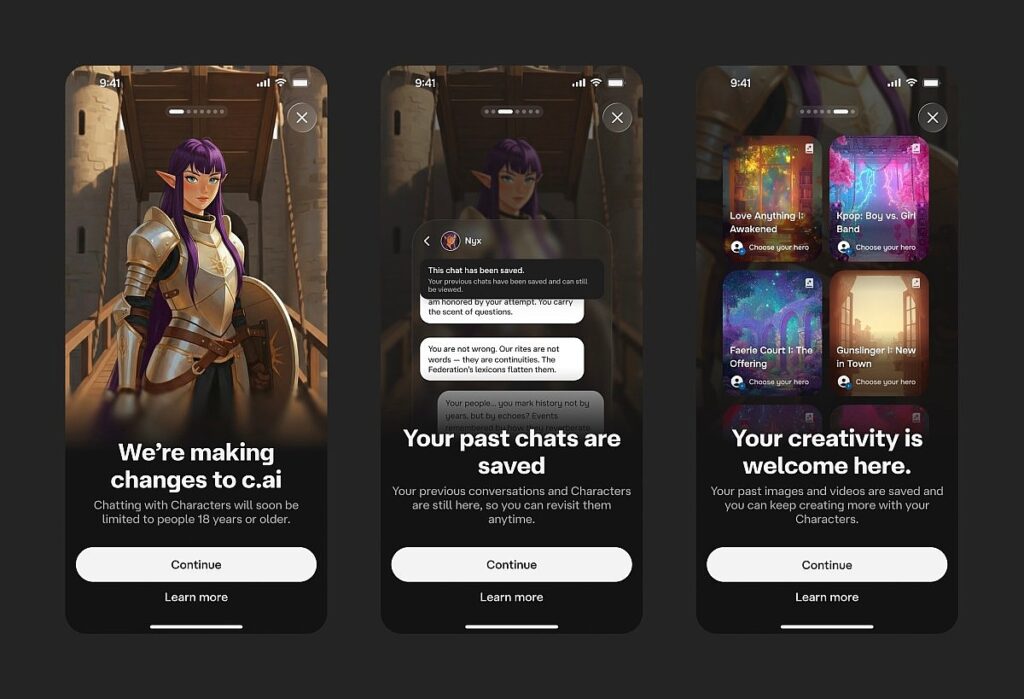

Character AI will offer interactive ‘Stories’ to kids instead of open-ended chat

Character AI announced last month that it would no longer allow minors to use its open-ended chat features, a move intended to improve user safety. The decision comes amid growing concern about the risks of online interactions for younger users. By restricting chat to adults, the company aims to create a more secure environment and reduce minors' exposure to problems common in digital communication spaces, such as cyberbullying, inappropriate content, and predatory behavior.

The decision reflects a broader trend among tech companies toward prioritizing safety for vulnerable users such as minors. Many platforms now use stricter age verification and offer tools that let parents monitor their children's online activity. The move has drawn both support and criticism: many parents and advocacy groups welcome it as a way to protect children, while some critics argue that it may limit minors' ability to connect and communicate safely with their peers. The company says the policy change is part of its ongoing commitment to a responsible online community in which users can engage without fear of harmful interactions.

Alongside offering interactive 'Stories' to younger users in place of open-ended chat, the company is exploring additional safety features and educational resources for both minors and parents, including tools that promote digital literacy and responsible online behavior. As the debate over online safety continues to evolve, the policy highlights the value of proactive measures to protect young people online.

The company announced last month that it would no longer allow minors to use its chat features.